__________________________________________________________________

Version 4.9, September 19, 2022

Copyright ©2002–2022 Krzysztof Kuchcinski and Radosław Szymanek. All rights reserved.

JaCoP library provides constraint programming paradigm implemented in Java. It provides primitives to define finite domain (FD) variables, constraints and search methods. The user should be familiar with constraint (logic) programming (CLP) to be able to use this library. A good introduction to CLP can be found, for example, in [18].

JaCoP library provides most commonly used primitive constraints, such as equality, inequality as well as logical, reified and conditional constraints. It contains also number of global constraints. These constraints can be found in most commercial CP systems [4, 25, 6, 22]. Finally, JaCoP defines also decomposable constraints, i.e., constraints that are defined using other constraints and possibly auxiliary variables.

JaCoP library can be used by providing it as a JAR file or by specifying access to a directory containing all JaCoP classes. An example how program Main.java, which uses JaCoP, can be compiled and executed in the Linux like environment is provided below.

javac -classpath .:path_to_JaCoP Main.java java -classpath .:path_to_JaCoP Main

or

javac -classpath .:JaCoP.jar Main.java java -classpath .:JaCoP.jar Main

Alternatively one can specify the class path variable.

In Java application which uses JaCoP it is required to specify import statements for all used classes from JaCoP library. An example of the import statements that import the whole subpackages of JaCoP at once is shown below.

import org.jacop.core.*; import org.jacop.constraints.*; import org.jacop.search.*;

Obviously, different Java IDE (Eclipse, IntelliJ, NetBeans, etc.) and pure Java build tools (e.g., Ant, Maven) can be used for JaCoP based application development.

Consider the problem of graph coloring as depicted in Fig. 1.1. Below, we provide a simplistic program with hard-coded constraints and specification of the search method to solve this particular graph coloring problem.

import org.jacop.core.*;

import org.jacop.constraints.*;

import org.jacop.search.*;

public class Main {

static Main m = new Main ();

public static void main (String[] args) {

Store store = new Store(); // define FD store

int size = 4;

// define finite domain variables

IntVar[] v = new IntVar[size];

for (int i=0; i<size; i++)

v[i] = new IntVar(store, "v"+i, 1, size);

// define constraints

store.impose( new XneqY(v[0], v[1]) );

store.impose( new XneqY(v[0], v[2]) );

store.impose( new XneqY(v[1], v[2]) );

store.impose( new XneqY(v[1], v[3]) );

store.impose( new XneqY(v[2], v[3]) );

// search for a solution and print results

Search<IntVar> search = new DepthFirstSearch<IntVar>();

SelectChoicePoint<IntVar> select =

new InputOrderSelect<IntVar>(store, v,

new IndomainMin<IntVar>());

boolean result = search.labeling(store, select);

if ( result )

System.out.println("Solution: " + v[0]+", "+v[1] +", "+

v[2] +", "+v[3]);

else

System.out.println("*** No");

}

}

This program produces the following output indicating that vertices v0, v1 and v3 get different colors (1, 2 and 3 respectively), while vertex v3 is assigned color number 1.

Solution: v0=1, v1=2, v2=3, v3=1

The problem is specified with the help of variables (FDVs) and constraints over these variables. JaCoP support both finite domain variables (integer variables) and set variables. Both variables and constraints are stored in the store (Store). The store has to be created before variables and constraints. Typically, it is created using the following statement.

Store store = new Store();

The store has large number of responsibilities. In short, it knits together all components required to model and solve the problem using JaCoP (CP methodology). One often abused functionality is printing method. The store has redefined the method toString(), but use it with care as printing large stores can be a very slow/memory consuming process.

In the next sections we will describe how to define FDVs and constraints.

Variable X :: 1..100 is specified in JaCoP using the following general statement (assuming that we have defined store with name store). Clerly, we required to have created store before we can create variables as any constructor for variable will require providing the reference to store in which the variable is created.

IntVar x = new IntVar(store, "X", 1,100);

One can access the actual domain of FDV using the method dom(). The minimal and maximal values in the domain can be accessed using min() and max() methods respectively. The domain can contain “holes”. This type of the domain can be obtained by adding intervals to FDV domain, as provided below:

IntVar x = new IntVar(store, "X", 1, 100);

x.addDom(120, 160);

which represents a variable X with the domain 1..100 ∨ 120..160.

FDVs can be defined without specifying their identifiers. In this case, a system will use an identifier that starts with “_” followed by a generated unique sequential number of this variable, for example “_123”. This is illustrated by the following code snippet.

IntVar x = new IntVar(store, 1, 100);

FDVs can be printed using Java primitives since the method toString() is redefined for class Variable. The following code snippet will first create a variable with the domain containing values 1, 2, 14, 15, and 16.

IntVar x = new IntVar(store, "x", 1, 2);

x.addDom(14, 16);

System.out.println(x);

The last line of code prints a variable, which produces the following output.

X::{1..2, 14..16}

One special variable class is a BooleanVariable. They have been added to JaCoP as they can be handled more efficiently than FDVs with multiple elements in their domain. They can be used as any other variable. However, some constraints may require BooleanVariables as parameters. An example of Boolean variable definition is shown below.

BooleanVar bv = new BooleanVar(s, "bv");

In the previous section, we have defined FDVs with domains without considering domain representation. JaCoP default domain (cllaed IntervalDomain) is represented as an ordered list of intervals. Each interval is represented by a pair of integers denoting the minimal and the maximal value. This representation makes it possible to define all possible finite domains of integers but it is not always computationally efficient. For some problems other representations might be more computationally efficient. Therefore, JaCoP also offers domain that is restricted to represent only one interval with its minimal and maximal value. This domain is called BoundDomain and can be used by a finite domain variable in a same way as interval domain. The only difference is that any attempt to remove values from inside the interval of this domain will have no effect.

The following code creates variable v with bound domain 1..10.

IntVar v = new IntVar(s, "v", new BoundDomain(1, 10) );

In JaCoP, there are three major types of constraints:

primitive constraints,

global constraints, and

decomposable constraints.

Primitive constraints and global constraints can be imposed using impose method, while decomposable constraints are imposed using imposeDecomposition method. An example that imposes a primitive constraint XneqY is defined below. Again, in order to impose a constraint a store object must be available.

store.impose( new XeqY(x1, x2));

Alternatively, one can define first a constraint and then impose it, as shown below.

PrimitiveConstraint c = new XeqY(x1, x2);

c.impose(store);

Both methods are equivalent.

The methods impose(constraint) and constraint.impose(store) often create additional data structures within the constraint store as well as constraint itself. Do note that constraint imposition does not involve checking if the constraint is consistent. Both methods of constraint imposition does not check whether the store remains consistent. If checking consistency is needed, the method imposeWithConsistency(constraint) should be used instead. This method throws FailException if the store is inconsistent. Note, that similar functionality can be achieved by calling the procedure store.consistency() explicitly (see section 6).

Constraints can have another constraints as their arguments. For example, reified constraints of the form X = Y ⇔ B can be defined in JaCoP as follows.

IntVar x = new IntVar(store, "X", 1, 100);

IntVar y = new IntVar(store, "Y", 1, 100);

IntVar b = new IntVar(store, "B", 0, 1);

store.impose( new Reified( new XeqY(x, y), b));

In a similar way disjunctive constraints can be imposed. For example, the disjunction of three constraints can be defined as follows.

PrimitiveConstraint[] c = {c1, c2, c3};

store.impose( new Or(c) );

or

ArrayList<PrimitiveConstraint> c =

new ArrayList<PrimitiveConstraint>();

c.add(c1); c.add(c2); c.add(c3);

store.impose( new Or(c) );

Note, that disjunction and other similar constraints accept only primitive constraints as parameters.

After specification of the model consisting of variables and constraints, a search for a solution can be started. JaCoP offers a number of methods for doing this. It makes it possible to search for a single solution or to try to find a solution which minimizes/maximizes given cost function. This is achieved by using the depth-first-search together with constraint consistency enforcement.

The consistency check of all imposed constrains is achieved calling the following method from class Store.

boolean result = store.consistency();

When the procedure returns false then the store is in inconsistent state and no solution exists. The result true only indicates that inconsistency cannot be found. In other words, since the finite domain solver is not complete it does not automatically mean that the store is consistent.

To find a single solution the DepthFirstSearch method can be used. Since the search method is used both for finite domain variables and set variables it is recommended to specify the type of variables that are used in search. For finite domain variables, this type is usually <IntVar>. It is possible to not specify these information but it will generate compilation warnings if compilation option -Xlint:unchecked is used. A simple use of this method is shown below.

IntVar[] vars;

...

Search<IntVar> label = new DepthFirstSearch<IntVar>();

SelectChoicePoint<IntVar> select =

new InputOrderSelect<IntVar>(store,

vars, new IndomainMin<IntVar>());

boolean result = label.labeling(store, select);

The depth-first-search method requires the following information:

how to assign values for each FDV from its domain; this is defined by IndomainMin class that starts assignments from the minimal value in the domain first and later assigns successive values.

how to select FDV for an assignment from the array of FDVs (vars); this is decided explicitly here by InputOrderSelect class that selects FDVs using the specified order present in vars.

how to perform labeling; this is specified by DepthFirstSearch class that is an ordinary depth-first-search.

Different classes can be used to implement SelectChoicePoint interface. They are summarized in Appendix B. The following example uses SimpleSelect that selects variables using the size of their domains, i.e., variable with the smallest domain is selected first.

IntVar[] vars;

...

Search<IntVar> label = new DepthFirstSearch<IntVar>();

SelectChoicePoint<IntVar> select =

new SimpleSelect<IntVar>(vars,

new SmallestDomain<IntVar>(),

new IndomainMin<IntVar>());

boolean result = label.labeling(store, select);

In some situations it is better to group FDVs and assign the values to them one after the other. JaCoP supports this by another variable selection method called SimpleMatrixSelect. An example of its use is shown below. This choice point selection heuristic works on two-dimensional lists of FDVs.

IntVar[][] varArray;

...

Search<IntVar> label = new DepthFirstSearch<IntVar>();

SelectChoicePoint<IntVar> select =

new SimpleMatrixSelect<IntVar>(

varArray,

new SmallestMax<IntVar>(),

new IndomainMin<IntVar>());

boolean result = label.labeling(store, select);

The optimization requires specification of a cost function. The cost function is defined by a FDV that, with the help of attached (imposed) constraints, gets correct cost value. A typical minimization for defined constraints and a cost FDV is specified below.

IntVar[] vars;

IntVar cost;

...

Search<IntVar> label = new DepthFirstSearch<IntVar>();

SelectChoicePoint<IntVar> select = new SimpleSelect<IntVar>(vars,

new SmallestDomain<IntVar>(),

new IndomainMin<IntVar>());

boolean result = label.labeling(store, select, cost);

JaCoP offers number of different search heuristics based on depth-first-search. For example, credit search and limited discrepancy search. They are implemented using plug-in listeners that modify the standard depth-first-serch. For more details, see section 6.

All constraints must be posed with impose method when domains of all their variables are fully defined.

JaCoP offers a set of primitive constraints that include basic arithmetic operations (+,-,*,∕) as well as basic relations (=,≠,<,≤,>,≥). Subtraction is not provided explicitly, but since constraints define relations between variables, it canbe defined using addition. For detailed list of primitive constraint see appendix A.1.

Primitive constraints can be used as arguments in logical and conditional constraints.

Logical and conditional constraints use primitive constraints as arguments. JaCoP allows also specification of the reified, half-reified (implication) and if-then-elese (IfThen, IfThenElse, Conditional) constraints. For detailed list of these constraints see appendix A.4.

Alldifferent constraint ensures that all FDVs from a given list have different values assigned. This constraint uses a simple consistency technique that removes a value, which is assigned to a given FDV from the domains of the other FDVs.

For example, a constraint

IntVar a = new IntVar(store, "a", 1, 3);

IntVar b = new IntVar(store, "b", 1, 3);

IntVar c = new IntVar(store, "c", 1, 3);

IntVar[] v = {a, b, c};

Constraint ctr = new Alldifferent(v);

store.impose(ctr);

enforces that the FDVs a, b, and c have different values.

Alldifferent constraint is provided as three different implementations. Constraint Alldifferent uses a simple implementation described above, i.e., if the domain of a finite domain variable gets assigned a value, the propagation algorithm will remove this value from the other variables. Constraint Alldiff implements this basic pruning method and, in addition, bounds consistency [16]. Finally, constraint Alldistinct implements a generalized arc consistency as proposed by Régin [20].

The example below illustrates the difference in constraints pruning power for Alldifferent and Alldiff constraints. Assume the following variables:

IntVar a = new IntVar(store, "a", 2, 3);

IntVar b = new IntVar(store, "b", 2, 3);

IntVar c = new IntVar(store, "c", 1, 3);

IntVar[] v = {a, b, c};

The constraints will produce the following results after consistency enforcement.

store.impose( new Alldifferent(v) );

a :: {2..3}, b :: {2..3}, c :: {1..3}

and

store.impose( new Alldiff(v) );

a :: {2..3}, b :: {2..3}, c = 1

Alldistinct constraint will prune domains of variables a, b and c in the same way as Alldiff constraints but, in addition, it can remove single inconsistent values as illustrated below. Assume the following domains for a, b and c.

a :: {1,3}, b :: {1,3}, c :: {1..3}

The constraints will produce the following results after consistency enforcement.

store.impose( new Alldistinct(v) );

a :: {1,3}, b :: {1,3}, c = 2

Circuit constraint tries to enforce that FDVs which represent a directed graph will create a Hamiltonian circuit. The graph is represented by the FDV domains in the following way. Nodes of the graph are numbered from 1 to N. Each position in the list defines a node number. Each FDV domain represents a direct successors of this node. For example, if FDV x at position 2 in the list has domain 1, 3, 4 then nodes 1, 3 and 4 are successors of node x. Finally, if the i’th FDV of the list has value j then there is an arc from i to j.

For example, the constraint

IntVar a = new IntVar(store, "a", 1, 3);

IntVar b = new IntVar(store, "b", 1, 3);

IntVar c = new IntVar(store, "c", 1, 3);

IntVar[] v = {a, b, c};

Constraint ctr = new Circuit(store, v);

store.impose(ctr);

can find a Hamiltonian circuit [2, 3, 1], meaning that node 1 is connected to 2, 2 to 3 and finally, 3 to 1.

Subcircuit constraint tries to enforce that FDVs which represent a directed graph will create a subcircuit. The graph is represented by the FDV domains in the same way as for the Circuit constraint. The result defines a subcircuit represented by values assigned to the FDVs. Nodes that do not belong to a subcircuit have the value pointing to itself.

For example, the constraint

IntVar a = new IntVar(store, "a", 1, 3);

IntVar b = new IntVar(store, "b", 1, 3);

IntVar c = new IntVar(store, "c", 1, 3);

IntVar[] v = {a, b, c};

Constraint ctr = new Subcircuit(v);

store.impose(ctr);

can find a circuit [2, 1, 3], meaning that node 1 is connected to 2 and 2 to 1 while node 3 is not in the subcircuit and has a value 3.

All solutions to this constraint are [1, 2, 3], [1, 3, 2], [2, 1, 3], [2, 3, 1], [3, 1, 2], [3, 2, 1], where the first solution represents a solution with no subsircuits and subseqent solutions define found subcrircuits.

Element constraint of the form Element(I, List, V ) enforces a finite relation between I and V , V = List[I]. The vector of values, List, defines this finite relation. For example, the constraint

IntVar i = new IntVar(store, "i", 1, 3);

IntVar v = new IntVar(store, "v", 1, 50);

int[] el = {3, 44, 10};

Constraint ctr = new Element(i, el, v);

store.impose(ctr);

imposes the relation on the index variable i :: {1..3}, and the value variable v1 . The initial domain of v will be pruned to v :: {3,10,44} after the intial consistency execution of this Element constraint. The change of one FDV propagates to another FDV. Imposing the constraint V < 44 results in change of I :: {1,3}.

This constraint can be used, for example, to define discrete cost functions of one variable or a relation between task delay and its implementation resources. The constraint is simply implemented as a program which finds values allowed both for the first FDV and third FDV and updating them respectively.

There are also two other versions of element constraint that implement a version of bounds consistency, ElementIntegerFast and ElementVariableFast. This constraints make faster computations of consistency methods but may not remove all inconsistent values.

Distance constraint computes the absolute value between two FDVs. The result is another FDV, i.e., d = |x - y|.

The example below

IntVar a = new IntVar(store, "a", 1, 10);

IntVar b = new IntVar(store, "b", 2, 4);

IntVar c = new IntVar(store, "c", 0, 2);

Constraint ctr = new Distance(a, b, c);

store.impose(ctr);

produces result a::1..6, b::2..4, c::0..2 since a must be pruned to have distance lower than three.

Cumulative constraint was originally introduced to specify the requirements on tasks which needed to be scheduled on a number of resources. It expresses the fact that at any time instant the total use of these resources for the tasks does not exceed a given limit. It has, in our implementation, four parameters: a list of tasks’ starts Oi, a list of tasks’ durations Di, a list of amount of resources ARi required by each task, and the upper limit of the amount of used resources Limit. All parameters can be either domain variables or integers. The constraint is specified as follows.

IntVar[] o = {O1, ..., On};

IntVar[] d = {D1, ..., Dn};

IntVar[] r = {AR1, ..., ARn};

IntVar Limit = new IntVar(Store, "limit", 0, 10);

Constraint ctr = Cumulative(o, d, r, Limit)

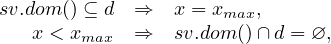

Formally, it enforces the following constraint:

![∑

∀t ∈ [1 m≤iin≤n(Oi),1 m≤aix≤n(Oi + Di)] : ARk ≤ Limit

k:Ok≤t≤Ok+Dk](guideJaCoP0x.png) | (3.1) |

In the above formulation, min and max stand for the minimum and the maximum values in the domain of each FDV respectively. The constraints ensures that at each time point, t, between the start of the first task (task selected by min(Oi)) and the end of the last task (task selected by max(Oi + Di)) the cumulative resource use by all tasks, k, running at this time point is not greater than the available resource limit. This is shown in Fig 3.1.

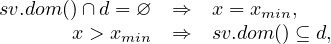

JaCoP additionally requires that there exist at least one time point where the number of used resources is equal to Limit, i.e.

![∑

∃t ∈ [ min (Oi), max (Oi + Di)] : ARk = Limit

1≤i≤n 1≤i≤n k:Ok≤t≤Ok+Dk](guideJaCoP1x.png) | (3.2) |

There is a version of Cumulative that only requires that the sum of ARk ≤ Limit and it is defined with addtional flag as follows. In this constraint, first two flags specify to make propagation based on edge finding algorithm and profile while the last flag informs the solver to not apply reuirement 3.2.

import org.jacop.constraints.Cumulative;

Constraint ctr = Cumulative(o, d, r, Limit, true, true, false)

There exist also a newer implementation of cumulative constraints based on methods presented in [27, 26, 23]. There are three following cumulative constraints.

CumulativeBasic- does time-tabling propagation,

Cumulative- does time-tabling and edge finding propagation, and

CumulativeUnary- a specialized version for unary resources and it does in addition to time-tabling and edge finding (specialized for unary resource) propagations, not-first-not-last, detectable propagations.

All propagations, except time-tabling, are done on binary trees and have the following complexities, where n is the number of tasks and k is the number of distinct resource values for tasks. Edge finding O(k ⋅ n ⋅ logn), not-first-not-last, detectable and overload O(n ⋅ logn).

An example of using this implementation for Cumulative constraint is as follows.

import org.jacop.constraints.cumulative.*;

Constraint ctr = Cumulative(o, d, r, Limit)

Diffn constraint takes as an argument a list of 2-dimensional rectangles and assures that for each pair i,j (i≠j) of such rectangles, there exists at least one dimension k where i is after j or j is after i, i.e., the rectangles do not overlap. The rectangle is defined by a 4-tuple [O1, O2, L1, L2], where Oi and Li are respectively called the origin and the length in i-th dimension. The diffn constraint is specified as follows.

import org.jacop.constraints.diffn.*;

IntVar[][] rectangles = {{O11, O12, L11, L12}, ...,

{On1, On2, Ln1, Ln2}};

Constraint ctr = new Diffn(rectangles)

Alternatively, the constraint can be specified using four lists of origins and lengths of rectangles.

import org.jacop.constraints.diffn.*;

IntVar[][] rectangles = {{O11, O21, ..., On1},

{O12, O22, ..., On2},

{L11, L21, ..., Ln1},

{L12, L22, ..., Ln2}};

Constraint ctr = new Diffn(rectangles)

The special case for the constraint is when one length becomes zero. In this case, the constraint places zero-length rectangles between other rectangles, that is the zero-length rectangle cannot be placed anywhere. To make possible, the so called, non-strict placement, the constraint has possibility to use the last Boolean parameter equal false, meaning non-strict placement.

The Diffn constraint can be used to express requirements for packing and placement problems as well as define constraints for scheduling and resource assignment.

This constraint uses two different propagators. The first one tries to narrow Oi and Li FDV’s of each rectangle so that rectangles do not overlap. The second one is similar to the cumulative profile propagator but it is applied in both directions (in 2-dimensional space) for all rectangles. In addition, the constraint checks whether there is enough space to place all rectangles in the limits defined by each rectangle FDV’s domains.

Simplified version of the constraint that logically enforces no overlapping conditions on rectangles is available as well and is called Nooverlap. This constraint has weaker pruning but is faster for simple problems. DiffnDecomposed is the decomposed version of the constraint that combines no overlapping constraint with two cumulative constraints (in direction x ands y).

These constraints enforce that a given FDV is minimal or maximal of all variables present on a defined list of FDVs.

For example, a constraint

IntVar a = new IntVar(Store, "a", 1, 3);

IntVar b = new IntVar(Store, "b", 1, 3);

IntVar c = new IntVar(Store, "c", 1, 3);

IntVar min = new IntVar(Store, "min", 1, 3);

IntVar[] v = {a, b, c};

Constraint ctr = new Min(v, min);

Store.impose(ctr);

will constraint FDV min to a minimal value of variables a, b and c.

NOTE! The position for parameters in constraints Min and Max is changed comparing to previous versions (i.e., parameters are swapped).

The related constraints ArgMinArgMax find an index of the minimal and maximal element respectively. In the standard version the first position of the minimal or maximal element is constrained. Indexing starts from 1 if the parameter offset is not used.

These constraints enforce a (wighted) sum of elements of FDVs’ vector

SumInt and SumBool constraint is defined as follows x1 + x2 +  + xn ℜ sum,

where all xi and sum are FDVs and ℜ∈{<,≤,>,≥,=,≠}. SumBool has additional

requirement that all FDVs must have domain 0..1.

+ xn ℜ sum,

where all xi and sum are FDVs and ℜ∈{<,≤,>,≥,=,≠}. SumBool has additional

requirement that all FDVs must have domain 0..1.

The weighted sum is provided by LinearInt constraint defined as follows

w1 ⋅x1 + w2 ⋅x2 +  + wn ⋅xn ℜ sum, where all wi and sum are integer constants, xi

are FDVs and ℜ∈{<,≤,>,≥,=,≠}. There exist also a constructor for LinearInt that

makes it possible to give sum as a FDV. In this case the constraint is transformed to its

normal form where FDV is added to the list of variables with weight -1 and the result is 0.

To define, for example, constraint w1 ⋅x1 + w2 ⋅x2 +

+ wn ⋅xn ℜ sum, where all wi and sum are integer constants, xi

are FDVs and ℜ∈{<,≤,>,≥,=,≠}. There exist also a constructor for LinearInt that

makes it possible to give sum as a FDV. In this case the constraint is transformed to its

normal form where FDV is added to the list of variables with weight -1 and the result is 0.

To define, for example, constraint w1 ⋅x1 + w2 ⋅x2 +  + wn ⋅xn = s where s is FDV, the

constraint will be internally transformed to w1 ⋅ x1 + w2 ⋅ x2 +

+ wn ⋅xn = s where s is FDV, the

constraint will be internally transformed to w1 ⋅ x1 + w2 ⋅ x2 +  + (-1) ⋅ s = 0.

+ (-1) ⋅ s = 0.

For example, the constraint

IntVar a = new IntVar(Store, "a", 1, 3);

IntVar b = new IntVar(Store, "b", 1, 3);

IntVar c = new IntVar(Store, "c", 1, 3);

IntVar sum = new IntVar(Store, "sum", 1, 10);

IntVar[] v = {a, b, c};

Constraint ctr = new SumInt(v, "==", sum);

Store.impose(ctr);

will constraint FDV sum to the sum of a, b and c.

Constraints SumInt, SumBool and LinearInt are primitive constraints. It means that they can be an argument in other constraints, such as Reified, conditional and logical constraints.

For example, the linear constraint can be used in reification, as follows.

Store store = new Store();

IntVar a = new IntVar(Store, "a", 1, 3);

IntVar b = new IntVar(Store, "b", 1, 3);

IntVar c = new IntVar(Store, "c", 1, 3);

IntVar[] v = {a, b, c};

PrimitiveConstraint ctr =

new LinearInt(store, v, new int[] {2, 3, -1}, "<", 10);

BooleanVar b = new BooleanVar(store);

store.impose(new Reified(ctr), b);

Primitive constraints LinearInt and SumInt implement pruning methods for large linear constraints presented in [10]. There exist also constraint LinearIntDom that implements domain consistency for linear constraint. This constraint has exponential complexity in worst case and need to be used carefully. This constraint can be useful when computing an index from two dimensional array to a vector for element constraint.

Constraints Sum, SumWeight and SumWeightDom extend class Constraint and are deprecated.

There exist several implementation of these constraints. Table and SimpleTable constraints use compact-table algorithms proposed in [5]. ExtensionalSupport and ExtensionalConflict are distinguished by their suffixes. The base implementation with suffix VA tries to balance the usage of memory versus time efficiency. ExtensionalSupportMDD uses multi-valued decision diagram (MDD) as internal representation and algorithms proposed in [3] while ExtensionalSupportSTR uses simple tabular reduction (STR) and the method proposed in [15].

Extensional support, extensional conflict and table constraints define relations between n FDVs. All constraints are defined by a vector of n FDVs and a vector of n-tuples of integer values. The n-tuples define the relation between variables defined in the first vector.

The tuples of extensional support and table constraint define all combinations of values that can be assigned to variables specified in the vector of FDVs. Extensional conflict, on the other hand specifies the combinations of values that are not allowed in any assignment to variables.

The example below specifies the XOR logical relation of the form a ⊕ b = c using both constraints.

IntVar a = new IntVar(store, "a", 0, 1);

IntVar b = new IntVar(store, "b", 0, 1);

IntVar c = new IntVar(store, "c", 0, 1);

IntVar[] v = {a, b, c};

// version with ExtensionalSupport constraint

store.impose(new ExtensionalSupportVA(v,

new int[][] {{0, 0, 0},

{0, 1, 1},

{1, 0, 1},

{1, 1, 0}}));

// version with ExtensionalConflict constraint

store.impose(new ExtensionalConflictVA(v,

new int[][] {{0, 0, 1},

{0, 1, 0},

{1, 0, 0},

{1, 1, 1}}));

Assignment constraint implements the following relation between two vectors of FDVs Xi = j ∧ Y j = i.

For example, the constraint

IntVar[] x = new IntVar[4];

IntVar[] y = new IntVar[4];

for (int i=0; i<4; i++) {

x[i] = new IntVar(store, "x"+i, 0, 3);

y[i] = new IntVar(store, "y"+i, 0, 3);

}

store.impose(new Assignment(x, y));

produces the following assignment to FDVs when x[1] = 3.

x0::{0..2}

x1=3

x2::{0..2}

x3::{0..2}

y0::{0, 2..3}

y1::{0, 2..3}

y2::{0, 2..3}

y3=1

The constraint has possibility to define the minimal index for vectors of FDVs. Therefore constraint Assignment(x, y, 1) will index variables from 1 instead of default value 0.

Count constraint counts number of occurrences of value Val on the list List in FDV Var.

For example, the constraint

IntVar[] List = new IntVar[4];

List[0] = new IntVar(store, "List_"+0, 0, 1);

List[1] = new IntVar(store, "List_"+1, 0, 2);

List[2] = new IntVar(store, "List_"+2, 2, 2);

List[3] = new IntVar(store, "List_"+3, 3, 4);

IntVar Var = new IntVar(store, "Var", 0, 4):

store.impose(new Count(List, Var, 2));

produces Var :: {1..2}.

If variable Var will be constrained to 1 then JaCoP will produce produces List_1 :: {0..1} .

NOTE! The position for parameters in constraint Count is changed comparing to previous versions.

There are several specialized versions of Count constraints defined as follows.

CountBounds(IntVar[] list, int value, int lb, int ub)

CountValues(IntVar[] list, IntVar[] counter, int[] values)

CountVar(IntVar[] list, IntVar counter, IntVar value)

CountValuesBounds(IntVar[] list, int[] lb, int[] ub, int[] values)

The two primitive constraints AtLeast and AtLast enforces number of occurrences of a give value on a list of variables.

AtLeast constraint requires that the number of values must be at least equal a given count value and constraint AtMost requires that the number of values must be at most equal a given count value. Constraints are primitive and can be used as parameters to other constraints, such as logical or reified.

Values constraint takes as arguments a list of variables and a counting variable. It counts a number of different values on the list of variables in the counting variable. For example, consider the following code.

IntVar x0 = new IntVar(store, "x0", 1,1);

IntVar x1 = new IntVar(store, "x1", 1,1);

IntVar x2 = new IntVar(store, "x2", 3,3);

IntVar x3 = new IntVar(store, "x3", 1,3);

IntVar x4 = new IntVar(store, "x4", 1,1);

IntVar[] val = {x0, x1, x2, x3, x4};

IntVar count = new IntVar(store, "count", 2, 2);

store.impose( new Values(count, val) );

Constraint Values will remove value 2 from variable x3 to assure that are only two different values (1 and 3) on the list of variables as specified by variable count.

NOTE! The position for parameters in constraint Values is changed comparing to previous versions.

Global cardinality constraint (GCC) is defined using two lists of variables. The first list is the value list and the second list is the counter list. The constraint counts number of occurrences of different values in the variables from the value list. The counter list is used to counter occurrences of a specific value. It can also specify the number of allowed occurrences of a specific value on the value list. Variables on the counter list are assigned to values as follows. The lowest value in the domain of all variables from the value list is counted by the first variable on the counters list. The next value (+1) is counted by the next variable and so on.

For example, the following code counts number of values -1, 0, 1 and 2 on value list x. The values are counted using counter list y using the following mapping. -1 is counted in y0, 0 is counted in y1, 1 is counted in y2 and 2 is counted in y3.

IntVar x0 = new IntVar(store, "x0", -1, 2);

IntVar x1 = new IntVar(store, "x1", 0, 2);

IntVar x2 = new IntVar(store, "x2", 0, 2);

IntVar[] x = {x0, x1, x2};

IntVar y0 = new IntVar(store, "y0", 1, 1);

IntVar y1 = new IntVar(store, "y1", 0, 1);

IntVar y2 = new IntVar(store, "y2", 0, 1);

IntVar y3 = new IntVar(store, "y3", 1, 2);

IntVar[] y = {y0, y1, y2, y3};

store.impose(new GCC(x, y));

The GCC constraint will allow only the following five combinations of x variables [x0=-1, x1=0, x2=2], [x0=-1, x1=1, x2=2], [x0=-1, x1=2, x2=0], [x0=-1, x1=2, x2=1], and [x0=-1, x1=2, x2=2].

There exist two other versions of global cardinality constraints, CountValues and CountValuesBounds. These constraints defines values to be counted explicitly as a parameter of the constraint. Not all values need to be counted. For example, the following constraint counts values 0, 1 and 2 only.

IntVar x0 = new IntVar(store, "x0", -1, 2);

IntVar x1 = new IntVar(store, "x1", 0, 2);

IntVar x2 = new IntVar(store, "x2", 0, 2);

IntVar[] x = {x0, x1, x2};

IntVar y1 = new IntVar(store, "y1", 1, 1);

IntVar y2 = new IntVar(store, "y2", 0, 1);

IntVar y3 = new IntVar(store, "y3", 1, 1);

IntVar[] counter = {y1, y2, y3};

int[] values = {0, 1, 2};

store.impose(new CountValues(x, counter, values));

It generates the following valid solutions.

[x0=-1, x1=0, x2=2]

[x0=-1, x1=2, x2=0]

[x0=0, x1=1, x2=2]

[x0=0, x1=2, x2=1]

[x0=1, x1=0, x2=2]

[x0=1, x1=2, x2=0]

[x0=2, x1=0, x2=1]

[x0=2, x1=1, x2=0]

Constraint CountValuesBounds makes it possible to defines counters as lower and upper bounds. The example above can be rewritten to the following form.

int[] lb = {1, 0, 1};

int[] ub = {1, 1, 1};

store.impose(new CountValuesBounds(x, lb, ub, values));

Among constraint is specified using three parameters. The first parameter is the value list, the second one is a set of values specified as IntervalDomain, and finally the third parameter, the counter, counts the number of variables from the value list that get assigned values from the set of values. The constraint assures that exactly the number of variables defined by count variable is equal to one value from the set of values.

The following example constraints that either 2 or 4 variables from value list numbers are equal either 1 or 3. There exist 2880 such assignments.

IntVar numbers[] = new IntVar[5];

for (int i = 0; i < numbers.length; i++)

numbers[i] = new IntVar(store, "n" + i, 0,5);

IntVar count = new IntVar(store, "count", 2,2);

count.addDom(4,4);

IntervalDomain val = new IntervalDomain(1,1);

val.addDom(3,3);

store.impose(new Among(numbers, val, count));

AmongVar constraint is a generalization of Among constraint. Instead of specifying a set of values it uses a list of variables as the second parameter. It counts how many variables from the value list are equal to at least one variable from list of variables (second parameter).

The example below specifies the same conditions as the Among constraint in the above example.

IntVar numbers[] = new IntVar[5];

for (int i = 0; i < numbers.length; i++)

numbers[i] = new IntVar(store, "n" + i, 0,5);

IntVar count = new IntVar(store, "count", 2,2);

count.addDom(4,4);

IntVar[] values = new IntVar[2];

values[0] = new IntVar(store, 1,1);

values[1] = new IntVar(store, 3,3);

store.impose(new AmongVar(numbers, values, count));

Regular constraint accepts only the assignment to variables that are accepted by an automaton. The automaton is specified as the first parameter of this constraint and a list of variable is the second parameter. This constraint implements a polynomial algorithm to establish GAC.

The automaton is specified by its states and transitions. There are three types of states: initial state, intermediate states, and final states. Each transition has associated domain containing all values which can trigger this transition. Values assigned to transitions must be present in the domains of assigned constraint variable. Each value may cause firing of the related transition. The automaton eventually reaches a final state after taking the last transition as specified by the value of the last variable.

Each state can be assigned a level by topologically sorting states of the automaton. The variables from the list (second parameters) are assigned to these levels. All states at the same level are assigned the same variable (see Figure 3.2). If necessary, the automaton, containing cycles, is unrolled to match a list of variables. Each transitions has assigned values that are allowed for a variable when the transition in the automaton is selected. This is specified as the interval domain.

The example below implements the automaton from Figure 3.2. This automaton defines condition for three variables to be different values 0, 1 or 2.

IntVar[] var = new IntVar[3];

var[0] = new IntVar(store, "v"+0, 0, 2);

var[1] = new IntVar(store, "v"+1, 0, 2);

var[2] = new IntVar(store, "v"+2, 0, 2);

FSM g = new FSM();

FSMState[] s = new FSMState[8];

for (int i=0; i<s.length; i++) {

s[i] = new FSMState();

g.allStates.add(s[i]);

}

g.initState = s[0];

g.finalStates.add(s[7]);

s[0].transitions.add(new FSMTransition(new IntervalDomain(0, 0),

s[1]));

s[0].transitions.add(new FSMTransition(new IntervalDomain(1, 1),

s[2]));

s[0].transitions.add(new FSMTransition(new IntervalDomain(2, 2),

s[3]));

s[1].transitions.add(new FSMTransition(new IntervalDomain(1, 1),

s[4]));

s[1].transitions.add(new FSMTransition(new IntervalDomain(2,2),

s[5]));

s[2].transitions.add(new FSMTransition(new IntervalDomain(0, 0),

s[4]));

s[2].transitions.add(new FSMTransition(new IntervalDomain(2,2),

s[6]));

s[3].transitions.add(new FSMTransition(new IntervalDomain(0, 0),

s[5]));

s[3].transitions.add(new FSMTransition(new IntervalDomain(1, 1),

s[6]));

s[4].transitions.add(new FSMTransition(new IntervalDomain(2, 2),

s[7]));

s[5].transitions.add(new FSMTransition(new IntervalDomain(1, 1),

s[7]));

s[6].transitions.add(new FSMTransition(new IntervalDomain(0, 0),

s[7]));

store.impose(new Regular(g, var));

Knapsack constraint specifies knapsack problem. This implementation2 was inspired by the paper [12] and published in [17]. The major extensions of that paper are the following. The quantity variables do not have to be binary. The profit and capacity of the knapsacks do not have to be integers. In both cases, the constraint accepts any finite domain variable.

The constraint specify number of categories of items. Each item has a given weight and profit. Both weight and profit are specified as positive integers. The problem is to select a number of items in each category to satisfy capacity constraint, i.e. the total weight must be in the limits specified by the capacity variable. Each such solution is then characterized by a given profit. It is defined in JaCoP as follows.

Knapsack(int[] profits, int[] weights, IntVar[] quantity,

IntVar knapsackCapacity, IntVar knapsackProfit)

It can be formalize using the following constraints.

![∑

weights[i]⋅quantity[i] = knapsackCapacity

∑ i

profits[i]⋅quantity[i] = knapsackP rofit

i

∀i : weights[i] ∈ ℤ >0 ∧ ∀i : profits[i] ∈ ℤ >0](guideJaCoP6x.png) | (3.3) (3.4) (3.5) |

Geost is a geometrical constraint, which means that it applies to geometrical objects. It models placement problems under geometrical constraints, such as non overlapping constraints. Geost consistency algorithm was proposed by Beldiceanu et al [21]. The implementation of Geost in JaCoP is a result of a master thesis by Marc-Olivier Fleury.

In order to describe the constraint, we will introduce several definitions and relate them to JaCoP implementation.

Definition 1 A shifted box b is a pair (b.t[],b.l[]) of vectors of integers of length k, where k is the number of dimensions of the problem. The origin of the box relative to a given reference is b.t[], and b.l[] contains the length of the box, for each dimension.

Shifted box is defined in JaCoP using class DBox. For example, a two dimensional shifted box starting at coordinates (0,0) and having length 2 in first dimension and 1 in second direction is specified as follows.

DBox sbox = new DBox(new int[] {0,0}, new int[] {2,1});

Definition 2 A shape s is a set of shifted boxes. It has a unique identifier s.sid.

In JaCoP, we can define n shapes as collection of shifted boxes. Shape identifiers start at 0 and must be assigned consecutive integers. Therefore we have shapes with identifiers in interval 0..n-1. The following JaCoP example defines a shape with identifier 0, consisting of three sboxs, depicted in Figure 3.4.

ArrayList<Shape> shapes = new ArrayList<Shape>();

ArrayList<DBox> shape1 = new ArrayList<DBox>();

shape1.add(new DBox(new int[] {0,0}, new int[] {2,1}));

shape1.add(new DBox(new int[] {0,1}, new int[] {1,2}));

shape1.add(new DBox(new int[] {1,2}, new int[] {3,1}));

shapes.add( new Shape(0, shape1) );

Definition 3 An object o is a tuple (o.id,o.sid,o.x[], o.start,o.duration,o.end). o.id is a unique identifier, o.sid is a variable that stores all shapes that o can take. o.x[] is a k-dimensional vector of variables which represent the origin of o. o.start, o.duration and o.end define the interval of time in which o is present.

An object in JaCoP is defined by class GeostObject. It specifies basically all parameters of an object. An example below specifies object 0 that can take shapes 0, 1, 2 and 3. The object can be placed using coordinates (X_o1, Y_o1). The object is present during time 2 to 14.

ArrayList<GeostObject> objects = new ArrayList<GeostObject>();

IntVar X_o1 = new IntVar(store, "x1", 0, 5);

IntVar Y_o1 = new IntVar(store, "y1", 0, 5);

IntVar[] coords_o1 = {X_o1, Y_o1};

IntVar shape_o1 = new IntVar(store, "shape_o1", 0, 3);

IntVar start_o1 = new IntVar(store, "start_o1", 2, 2);

IntVar duration_o1 = new IntVar(store, "duration_o1", 12, 12);

IntVar end_o1 = new IntVar(store, "end_o1", 14, 14);

GeostObject o1 = new GeostObject(0, coords_o1, shape_o1,

start_o1, duration_o1, end_o1);

objects.add(o1);

Note that since object shapes are defined in terms of collections of shifted boxes, and since shifted boxes have a fixed size, Geost is not suited to solve problems in which object sizes can vary. Polymorphism provides some flexibility (shape variable having multiple values in their domain), but it is essentially intended to allow the modeling of objects that can take a small amount of different shapes. Typically objects that can be rotated. The duration of an object can be useful in cases where objects have variable sizes, because it is a variable, which means that some more flexibility is available. However, this feature is only available for one dimension. These restrictions are design choices made by the authors of Geost, probably because it fits well their primary field of application, which consists in packing goods in trucks. Using fixed sized shapes is also useful because it allows more deductions concerning possible placements.

When all shapes and objects are defined it is possible to specify geometrical constraints that must be fulfilled when placing these objects. Implemented geometrical constraints include in-area and non-overlapping constraints. In-area constraint enforces that objects have to lie inside a given k-dimensional sbox. Non-overlapping constraints require that no two objects can overlap.

The code below specifies two geometrical constraint, non-overlapping and in-area. They are specified by classes NonOverlapping and InArea. It must be noted that non-overlapping constraint in the code below specifies that all objects must not overlap in its two dimensions and time dimension (the time dimension is implemented as one additional dimension and therefore we specify dimensions 0, 1 and 2). In-area constraint requires that all object must be included in the sbox of dimensions 5x4.

ArrayList<ExternalConstraint> constraints =

new ArrayList<ExternalConstraint>();

int[] dimensions = {0, 1, 2};

NonOverlapping constraint1 =

new NonOverlapping(objects, dimensions);

constraints.add(constraint1);

InArea constraint2 = new InArea(

new DBox(new int[] {0,0}, new int[] {5,4}), null);

constraints.add(constraint2);

Finally, the Geost constraint is imposed using the following code.

store.impose( new Geost(objects, constraints, shapes) );

NetworkFlow constraint defines a minimum-cost network flow problem. An instance of this problem is defined on a directed graph by a tuple (N,A,l,u,c,b), where

N is the set of nodes,

A is the set of directed arcs,

l : A → ℤ≥0 is the lower capacity function on the arcs,

u : A → ℤ≥0 is the upper capacity function on the arcs,

c : A → ℤ is the flow cost-per-unit function on the arcs,

b : N → ℤ is the node mass balance function on the nodes.

A flow is a function x : A → ℤ≥0. The minimum-cost flow problem asks to find a flow that satisfies all arc capacity and node balance conditions, while minimizing total cost. It can be stated as follows:

| min | z(x) = ∑ (i,j)∈Acijxij | (3.6) |

| s.t. | lij ≤ xij ≤ uij ∀(i,j) ∈ A, | (3.7) |

| ∑ j:(i,j)∈Axij -∑ j:(j,i)∈Axji = bi ∀i ∈ N | (3.8) |

The network is built with NetworkBuilder class using node, defined by class org.jacop.net.Node and arcs. Each node is defined by its name and node mass balance b. For example, node A with balance 0 is defined using network net as follows.

NetworkBuilder net = new NetworkBuilder();

Node A = net.addNode("A", 0);

Source node producing flow of capacity 5 and sink node consuming flow of capacity 5 are defined using similar constructs but different value of node mass balance, as indicated below.

Node source = net.addNode("source", 5);

Node sink = net.addNode("sink", -5);

Arc of the network are defined always between two nodes. They define connection between given nodes, the lower and upper capacity values assigned to the arc (values l and u) as well as the flow cost-per-unit function on the arc (value c). This can be defined using different methods with either integers or finite domain variables. For example, an arc from source to node A with l = 0 and u = 5 and c = 3 can be defined as follows.

net.addArc(A, B, 3, 0, 5);

or

x = new IntVar(store, "source->A", 0, 5);

net.addArc(A, B, 3, x);

It can be noted, that cost-per-unit value can also be defined as a finite domain variable.

The constraint has also the flow cost, defined as z(x) in 3.6. It is defined as follows.

IntVar cost = new IntVar(store, "cost", 0, 1000);

net.setCostVariable(cost);

Note that the NetworkFlow only ensures that cost z(x) ≤ Zmax, where z(x) is the total cost of the flow (see equation (3.6)). In our code it is defined as variable cost.

The constraint is finally posed using the following method.

store.impose(new NetworkFlow(net));

For example, Figure 3.5 presents the code for network flow problem depicted in Figure 3.6. The minimal flow, found by the solver, is 10 that is indicated in the figure.

store = new Store();

IntVar[] x = new IntVar[8];

NetworkBuilder net = new NetworkBuilder();

Node source = net.addNode("source", 5);

Node sink = net.addNode("sink", -5);

Node A = net.addNode("A", 0);

Node B = net.addNode("B", 0);

Node C = net.addNode("C", 0);

Node D = net.addNode("D", 0);

x[0] = new IntVar(store, "x_0", 0, 5);

x[1] = new IntVar(store, "x_1", 0, 5);

net.addArc(source, A, 0, x[0]);

net.addArc(source, C, 0, x[1]);

x[2] = new IntVar(store, "a->b", 0, 5);

x[3] = new IntVar(store, "a->d", 0, 5);

x[4] = new IntVar(store, "c->b", 0, 5);

x[5] = new IntVar(store, "c->d", 0, 5);

net.addArc(A, B, 3, x[2]);

net.addArc(A, D, 2, x[3]);

net.addArc(C, B, 5, x[4]);

net.addArc(C, D, 6, x[5]);

x[6] = new IntVar(store, "x_6", 0, 5);

x[7] = new IntVar(store, "x_7", 0, 5);

net.addArc(B, sink, 0, x[6]);

net.addArc(D, sink, 0, x[7]);

IntVar cost = new IntVar(store, "cost", 0, 1000);

net.setCostVariable(cost);

store.impose(new NetworkFlow(net));

Network builder has a special attribute handlerList that makes it possible to specify structural rules connected to the network. Each structural rule must implement VarHandler interface, which allows network flow constraint to cooperate with the structural rule. An important, already implemented rule, is available in the class DomainStructure. It specifies, for each structural variable sv, a list of arcs that the structural rule influence. This structural rule makes it possible to enforce minimum or maximum amount of flow on a given arc depending on the relationship between the domain of variable sv and domain d, specified within a structural rule. The domain of variable sv and domain d do not intersect if and only if the flow on a given arc is minimal as specified by initial value x.min(), denoted as xmin. Moreover, the domain of varibale sv is contained within domain d if and only if the actual flow on a given arc is maximal as specified by initial value x.max(), denoted as xmax. It is enforced by the following rules.

| (3.9) |

| (3.10) |

For example, creation of structural rule for arc between node source and B will enforce that this arc will have maximal flow if variable s is zero and minimal flow otherwise. The rule works also in the other direction, i.e. if the flow will be maximal variable s=0 and if the flow will be minimal this variable will be 1.

Arc[] arcs = new Arc[1];

arcs[0] = net.addArc(source, B, 0, x[1]);

IntVar s = new IntVar(store, "s", 0, 1);

Domain[] domCond = new IntDomain[1];

domCond[0] = new IntervalDomain(0, 0);

net.handlerList.add(new DomainStructure(s,

Arrays.asList(domCond),

Arrays.asList(arcs)));

SoftAlldifferent constraint as well as SoftGCC constraint use DomainStructure rules to enforce flow from variable nodes to value nodes according the actual domain/value of variables represented by variable nodes. It is crucial functionality for the implementation of those soft global constraints.

Binpacking constraint defines a problem of packing bins with the specified items of a given size. Each bin has a defined load. The constraint is defined as follows.

Binpacking(IntVar[] item, IntVar[] load, int[] size)

where item means the bin number assigned to item at position i, load defines load of bin i as finite domain variable (min and max load) and size defines items i size.

It can be formalize using the following formulation.

![∑

∀i size[j] = load[i]

j whereitem[j]=i](guideJaCoP9x.png) | (3.11) |

The binpacking constraint implements methods and algorithms proposed in [24].

The LexOrder constraint enforces ascending lexicographic order between two vectors that can be of different size. The constraints makes it possible to enforce strict ascending lexicographic order, that is the fist vector must be always before the second one in the lexicographical order, or it can allow equality between the vectors. This is controlled by the last parameter of the constraint that is either true for strictly ascending lexicographic order or false otherwise. The default is non-strictly ascending lexicographic order when no second parameter is defined.

The following code specifies Lex constraint that holds when the strict ascending lexicographic order holds between two vectors of 0/1 varables.

int N = 2;

IntVar[] x = new IntVar[N];

for (int i = 0; i < x.length; i++)

x[i] = new IntVar(store, "x["+i+"]", 0, 1);

IntVar[] y = new IntVar[N];

for (int i = 0; i < y.length; i++)

y[i] = new IntVar(store, "y["+i+"]", 0, 1);

store.impose(new LexOrder(x, y, true));

The result is as follows.

[0, 0, 0], [0, 0, 1]

[0, 0, 0], [0, 1, 0]

[0, 0, 0], [0, 1, 1]

[0, 0, 0], [1, 0, 0]

[0, 0, 0], [1, 0, 1]

[0, 0, 0], [1, 1, 0]

[0, 0, 0], [1, 1, 1]

[0, 0, 1], [0, 1, 0]

[0, 0, 1], [0, 1, 1]

[0, 0, 1], [1, 0, 0]

[0, 0, 1], [1, 0, 1]

[0, 0, 1], [1, 1, 0]

[0, 0, 1], [1, 1, 1]

[0, 1, 0], [0, 1, 1]

[0, 1, 0], [1, 0, 0]

[0, 1, 0], [1, 0, 1]

[0, 1, 0], [1, 1, 0]

[0, 1, 0], [1, 1, 1]

[0, 1, 1], [1, 0, 0]

[0, 1, 1], [1, 0, 1]

[0, 1, 1], [1, 1, 0]

[0, 1, 1], [1, 1, 1]

[1, 0, 0], [1, 0, 1]

[1, 0, 0], [1, 1, 0]

[1, 0, 0], [1, 1, 1]

[1, 0, 1], [1, 1, 0]

[1, 0, 1], [1, 1, 1]

[1, 1, 0], [1, 1, 1]

The algorithm of this constraint is based on the algorithm presented in [8].

It defines value precedence constraint for integers. Value precedence is defined for integer value s, integer value t and an integer sequence x = [x0,...,xn-1] and means the following. If there exists j such that xj = t, then there must exist i < j such that xi = s. The algorithm is based on paper [14].

For example, the following code defines that 1 has to always proceed 4 in the sequence of four variables x (ValuePrecede) and, in addition x[2] = 4 (constraint XeqC).

int N = 4;

IntVar[] x = new IntVar[N];

for (int i = 0; i < N; i++) {

x[i] = new IntVar(store, "x["+i+"]", 1, N);

}

store.impose(new XeqC(x[2], 4));

store.impose(new ValuePrecede(1, 4, x));

The resulting 24 solutions is as follows.

[x[0]=1, x[1]=1, x[2]=4, x[3]=1]

[x[0]=1, x[1]=1, x[2]=4, x[3]=2]

[x[0]=1, x[1]=1, x[2]=4, x[3]=3]

[x[0]=1, x[1]=1, x[2]=4, x[3]=4]

[x[0]=1, x[1]=2, x[2]=4, x[3]=1]

[x[0]=1, x[1]=2, x[2]=4, x[3]=2]

[x[0]=1, x[1]=2, x[2]=4, x[3]=3]

[x[0]=1, x[1]=2, x[2]=4, x[3]=4]

[x[0]=1, x[1]=3, x[2]=4, x[3]=1]

[x[0]=1, x[1]=3, x[2]=4, x[3]=2]

[x[0]=1, x[1]=3, x[2]=4, x[3]=3]

[x[0]=1, x[1]=3, x[2]=4, x[3]=4]

[x[0]=1, x[1]=4, x[2]=4, x[3]=1]

[x[0]=1, x[1]=4, x[2]=4, x[3]=2]

[x[0]=1, x[1]=4, x[2]=4, x[3]=3]

[x[0]=1, x[1]=4, x[2]=4, x[3]=4]

[x[0]=2, x[1]=1, x[2]=4, x[3]=1]

[x[0]=2, x[1]=1, x[2]=4, x[3]=2]

[x[0]=2, x[1]=1, x[2]=4, x[3]=3]

[x[0]=2, x[1]=1, x[2]=4, x[3]=4]

[x[0]=3, x[1]=1, x[2]=4, x[3]=1]

[x[0]=3, x[1]=1, x[2]=4, x[3]=2]

[x[0]=3, x[1]=1, x[2]=4, x[3]=3]

[x[0]=3, x[1]=1, x[2]=4, x[3]=4]

Member constraint enforces that an element e is present on a list x and is defined as follows.

Member(IntVar[] x, IntVar e)

where x is a list of variables and e is the element. The constraint is primitive and can be used in different context as a parameter of other constraints.

Channeling constraints are generalizations of simple Reified and Implies constraints.

ChannelReif enforces the following constrains in one global constraint.

∀i x=i<=>bi

The above constraints are specified using a single ChannelReif constraint.

ChannelReif(x, b);

where x is a IntVar and b is vector of 0..1 variables.

Similarly, ChannelImply enforces the following constrains in one global constraint.

∀i bi=>x=i

More advanced versions of these constraints make it possible to specify possible values checked for variable x. For more details check API documentation.

Decomposed constraints do not define any new constraints and related pruning algorithms. They are translated into existing JaCoP constraints. Sequence and Stretch constraints are decomposed using Regular constraint.

Decomposed constraints are imposed using imposeDecomposition method instead of ordinary impose method.

Sequence constraint restricts values assigned to variables from a list of variables in such a way that any sub-sequence of length q contains N values from a specified set of values. Value N is further restricted by specifying min and max allowed values. Value q, min and max must be integer.

The following code defines restrictions for a list of five variables. Each sub-sequence of size 3 must contain 2 (min = 2 and max = 2) values 1.

IntVar[] var = new IntVar[5];

for (int i=0; i<var.length; i++)

var[i] = new IntVar(store, "v"+i, 0, 2);

store.imposeDecomposition(new Sequence(var, //variable list

new IntervalDomain(1,1), //set of values

3, // q, sequence length

2, // min

2 // max

));

There exist ten solutions: [01101, 01121, 10110, 10112, 11011, 11211, 12110, 12112, 21101, 21121]

Stretch constraint defines what values can be taken by variables from a list and how sub-sequences of these values are formed. For each possible value it specifies a minimum (min) and maximum (max) length of the sub-sequence of these values.

For example, consider a list of five variables that can be assigned values 1 or 2. Moreover we constraint that the sub-sequence of value 1 must have length 1 or 2 and the sub-sequence of value 2 must have length either 2 or 3. The following code specifies these restrictions.

IntVar[] var = new IntVar[5];

for (int i=0; i<var.length; i++)

var[i] = new IntVar(store, "v"+i, 1, 2);

store.imposeDecomposition(

new Stretch(new int[] {1, 2}, // values

new int[] {1, 2}, // min for 1 & 2

new int[] {2, 3}, // max for 1 & 2

var // variables

));

This program produces six solutions: [11221, 11222, 12211, 12221, 22122, 22211]

The Lex constraint enforces ascending lexicographic order between n vectors that can be of different size. The constraints makes it possible to enforce strict ascending lexicographic order, that is vector i must be always before vector i + 1 in the lexicographical order, or it can allow equality between consecutive vectors. This is controlled by the last parameter of the constraint that is either true for strictly ascending lexicographic order or false otherwise. The default is non-strictly ascending lexicographic order when no second parameter is defined.

The following code specifies Lex constraint that holds when the strict ascending lexicographic order holds between four vectors of different sizes.

IntVar[] d = new IntVar[6];

for (int i = 0; i < d.length; i++) {

d[i] = new IntVar(store, "d["+i+"]", 0, 2);

}

IntVar[][] x = { {d[0],d[1]}, {d[2]}, {d[3],d[4]}, {d[5]} };

store.imposeDecomposition(new Lex(x, true));

This program produces nine following solutions.

[[0, 0], [1], [1, 0], [2]],

[[0, 0], [1], [1, 1], [2]],

[[0, 0], [1], [1, 2], [2]],

[[0, 1], [1], [1, 0], [2]],

[[0, 1], [1], [1, 1], [2]],

[[0, 1], [1], [1, 2], [2]],

[[0, 2], [1], [1, 0], [2]],

[[0, 2], [1], [1, 1], [2]],

[[0, 2], [1], [1, 2], [2]]

Soft-alldifferent makes it possible to violate to some degree the alldifferent relation. The violations will come at a cost which is represented by cost variable. This constraint is decomposed with the help of network flow constraint.

There are two violation measures supported, decomposition based, where violation of any inequality relation between any pair contributes one unit of cost to the cost metric. The other violation measure is called variable based, which simply states how many times a variable takes value that is already taken by another variable.

The code below imposes a soft-alldifferent constraint over five variables with cost defined as being between 0 and 20.

IntVar[] x = new IntVar[5];

for (int i=0; i< x.length; i++)

x[i] = new IntVar(store, "x"+i, 1, 4);

IntVar cost = new IntVar(store, "cost", 0, 20);

store.imposeDecomposition(new SoftAlldifferent(x, cost,

ViolationMeasure.DECOMPOSITION_BASED );

Soft-GCC constraint makes it possible to violate to some degree GCC constraint. The Soft-GCC constraint requires number of arguments. In the code example below, vars specify the list of variables, which values are being counted, and the list of integers countedValues specifies the values that are counted. Values are counted in two counters specified by a programmer. The first list of counter variables, denoted by hardCounters in our example, specifies the hard limits that can not be violated. The second list, softCounters, specifies preferred values for counters and can be violated. Each position of a variable on these lists corresponds to the position of the value being counted on list countedValues. In practice, domains of variables on list hardCounters should be larger than domains of corresponding variables on list softCounters.

Soft-GCC accepts only value based violation metric that, for each counted value, sums up the shortage or the excess of a given value among vars. There are other constructors of Soft-GCC constraint that allows to specify hard and soft counting constraints in multitude of different ways.

store.imposeDecomposition(new SoftGCC(vars, hardCounters,

countedValues, softCounters,

cost, ViolationMeasure.VALUE_BASED));

JaCoP provides a library of set constraints in library org.jacop.set. This implementation is based on set intervals and related operations as originally presented in [9] and the first version has been implemented as a diploma project1 . JaCoP definition of set intervals, set domains and set variables is presented in section 4.1. Available constraints are discussed in section 4.2. Search, using set variables, is introduced in section 4.3.

Set is defined as an ordered collection of integers using class org.jacop.core.IntervalDomain and a set domain as abstract class org.jacop.set.core.SetDomain. Currently, there exist only one implementation of set domain as a set interval, called BoundSetDomain. The set interval for BoundSetDomain d is defined by its greatest lower bound (glb(d)) and its least upper bound (lub(d)). For example, set domain d = {{1}..{1..3}} is defined with glb(d) = {1}, set containing element 1, and lub(d) = {1..3}, set containing elements 1, 2 and 3. This set domain represent a set of sets {{1},{1..2},{1,3},{1..3}}. Each set domain to be correct must have glb(d) ⊆ lub(d). glb(d) can be considered as a set of all elements that are members of the set and lub(d) specifies the largest possible set.

The following statement defines set variable s for the set domain discussed above.

SetVar s = new SetVar(store, "s",

new BoundSetDomain(new IntervalDomain(1,1),

new IntervalDomain(1,3)));

BoundSetDomain can specify a typical set domain, such as d = {{}..{1..3}}, in a simple way as

SetVar s = new SetVar(store, "s", 1, 3);

and an empty set domain as

SetVar s = new SetVar(store, "s", new BoundSetDomain());

Set domain can be created using IntervalDomain and BoundSetDomain class methods. They make it possible to form different sets by adding elements to sets.

JaCoP implements a number of set constraints specified in appendix A.2. Constraints AinS, AeqB and AinB are primitive constraints and can be reified and used in other constraints, such as conditional and logical. Other constraints are treated as ordinary JaCoP constraints.

Consider the following code that uses union constraint.

SetVar s1 = new SetVar(store, "s1",

new BoundSetDomain(new IntervalDomain(1,1),

new IntervalDomain(1,4)));

SetVar s2 = new SetVar(store, "s2",

new BoundSetDomain(new IntervalDomain(2,2),

new IntervalDomain(2,5)));

SetVar s = new SetVar(store, "s", 1,10);

Constraint c = new AunionBeqC(s1, s2, s);

It performs operation {{1}..{1..4}} ⋃ {{2}..{2..5}} = {{}..{1..10}} and produces {{1}..{1..4}} ⋃ {{2}..{2..5}} = {{1..2}..{1..5}}. This represents 108 possible solutions.

Set variables will require different search organization. Basically, during search the decisions will be made whether an element belongs to a set or it does not belong to this set.

JaCoP still uses DepthFirstSearch but needs different methods for set variable selection implementing ComparatorVariable and a method for value selection implementing Indomain. The special methods are specified in appendix B.2. In addition, variable selection methods MostConstrainedStatic and MostConstrainedDynamic will also work.

An example search can be specified as follows.

Search<SetVar> search = new DepthFirstSearch<SetVar>();

SelectChoicePoint<SetVar> select = new SimpleSelect<SetVar>(

vars,

new MinLubCard<SetVar>(),

new MaxGlbCard<SetVar>(),

new IndomainsetMin<SetVar>());

search.setSolutionListener(new SimpleSolutionListener<SetVar>());

boolean result = search.labeling(store, select);

JaCoP provides a library of floating point constraints in library org.jacop.floats. This implementation is based on floating point intervals and related constraints.

Floating point domain is defined in a similar way as integer variable domain as an ordered list of floating point intervals in class org.jacop.floats.core.FloatIntervalDomain. This class implements an abstract class org.jacop.floats.core.FloatDomain that in turn extends ordinary JaCoP domain defined in org.jacop.core.Domain. The floating point variable is defined using the floating point interval as follows.

FloatVar f = new FloatVar(store, "f", 0.0, 10.0);

This java declaration defines floating point variable f with domain 0.0..10.0.

The float variables are used in floating point constraints, discussed in the next section. Special attention is paid to precision of floating point operations. Basically, JaCoP considers a floating point interval to represent a single value if a difference between max and min values of a variable is lower than an epsilon value (ϵ). Epsilon is calculated based on a given precision and ulp value (ulp is a floating point specific value know as “unit at last position”). The precision is defined in org.jacop.core.FloatDomain and can be set-up and obtained using following methods FloatDomain.setPrecision(double p) and FloatDomain.precision(). Epsilon is defined as a maximum of ulp and the precision. Class FloatDomain defines also min and max values of floating variables and values of π and e.

JaCoP implements a number of floating point constraints specified in appendix A.3.

Constraints PeqC, PeqQ, PgtC, PgtQ, PgteqC, PgteqQ, PltC, PltQ, PlteqC, PlteqQ, PneqC, PneqQ, PplusCeqR,PplusQeqR, PminusCeqR, PminusQeqR and LinearFloat are primitive constraints and can be reified and used in other constraints, such conditional and logical. Other constraints are treated as ordinary JaCoP constraints.

Consider the following code that uses division constraint.

FloatDomain.setPrecision(1E-4);

FloatVar a = new FloatVar(store, "a", 0.1, 3.0);

FloatVar b = new FloatVar(store, "b", -0.2, 6.0);

FloatVar c = new FloatVar(store, "c", -1000, 1000);

store.impose(new PdivQeqR(a, b, c));

It performs operation {0.100..3.000} / {-0.200..6.000} and produces a pruned domain for c::{-1000.0000..-0.5000, 0.0167..1000.0000}, assuming precision 10-4.

Floating point variables will require special search definition. Basically, a domain split search (bisection) is used together with consistency checking to find a solution.

JaCoP standard search for floating point variables still uses DepthFirstSearch but needs to use specific methods for selection of choice points as well as variable selection. org.jacop.floats.search.SplitSelectFloat is used to define how intervals of floating point variables will be split. Default behavior of this method is to split an interval in a middle and try the left part first. If variable leftFirst is set to false the right interval will be checked first. The variables will be selected based on the variable selection heuristic defined as a parameter for SplitSelectFloat class. There exist several methods to select variables specific for FloatVar. They are defined in org.jacop.floats.search. Other general methods that are not specific for a given domain can also be used here, for example org.jacop.search.MostConstrainedStatic.

An example search can be specified as follows.

DepthFirstSearch<FloatVar> search = new DepthFirstSearch<FloatVar>();

SplitSelectFloat<FloatVar> select = new SplitSelectFloat<FloatVar>(

store, x,

new LargestDomainFloat<FloatVar>());

search.setSolutionListener(new PrintOutListener<FloatVar>());

boolean result = search.labeling(store, select);

Minimization, using DepthFirstSearch class, is done as for other variables by calling labeling method with the third parameter defining cost variable. Both IntVar and FloatVar can be used to defined cost criteria for minimization.

JaCoP offers also another minimization method. This method uses cost variable to lead the minimization. The method splits the domain of cost variable into two parts and then checks, using standard DepthFirstSearch labeling method if there is a solution in this part. If there is a solution in this part it continues to split the current interval and checks the left interval again. If there is no solution in this part (depth-first-search return false) it will try the right interval. The search finishes when the cost variable is ground (the difference between max and min is lower or equal epsilon). An example code is presented below.

DepthFirstSearch<FloatVar> search = new DepthFirstSearch<FloatVar>();

SplitSelectFloat<FloatVar> select = new SplitSelectFloat<FloatVar>(

store, x,

new LargestDomainFloat<FloatVar>());

Optimize min = new Optimize(store, search, select, cost);

boolean result = min.minimize();

It is also possible to define SplitSelectFloat with variable selection heuristic (the last parameter) equal null. In this case a default method will be used that is round-robin selection. The variables are selected in a round-robin fashion until their domains will not become ground.

The following code defines equation x2 + sin(1∕x3) = 0 together with the search that finds all 318 solutions.

FloatDomain.setPrecision(1.0e-14);

FloatDomain.intervalPrint(false);

Store store = new Store();

FloatVar x = new FloatVar(store, "x", 0.1, 1.0);

FloatVar zero = new FloatVar(store, 0,0);

FloatVar one = new FloatVar(store, 1,1);

// x*x + sin(1.0/(x*x*x)) = 0.0;

FloatVar[] temp = new FloatVar[4];

for (int i=0; i<temp.length; i++)

temp[i] = new FloatVar(store, "temp["+i+"]", -1e150, 1e150);

store.impose(new PmulQeqR(x, x, temp[0]));

store.impose(new PmulQeqR(x, temp[0], temp[1]));

store.impose(new PdivQeqR(one, temp[1], temp[2]));

store.impose(new SinPeqR(temp[2], temp[3]));

store.impose(new PplusQeqR(temp[0], temp[3], zero));

DepthFirstSearch<FloatVar> search = new DepthFirstSearch<FloatVar>();

SplitSelectFloat<FloatVar> s = new SplitSelectFloat<FloatVar>(store,

new FloatVar[] {x}, null);

search.setSolutionListener(new PrintOutListener<FloatVar>());

search.getSolutionListener().searchAll(true);

search.labeling(store, s);

The last solution is x = 0.65351684593772.

JaCoP package org.jacop.floats offers also experimental extensions. They include a method to generate constraints that define the derivative of a function, represented by constraints, and a interval Newton method.

JaCoP can do symbolic (partial) derivatives of functions, that is using the defined constraints for a given function and a variable defining the result of the function, JaCoP will generate constraints that define a function for a derivative of the original function. There is a complication in this process since constraints are not functions and sometime it is difficult or impossible to find out which constraint defines a function for a given variable. In such situations JaCoP uses a heuristic to find out this but it is also possible to define this explicitly. The following examples explains the process of computing derivatives of constrains.

Set<FloatVar> vars = new HashSet<FloatVar>();

vars.add(x1);

vars.add(x2);

Derivative.init(store);

// Derivative.defineConstraint(x1x1, c0);

// Derivative.defineConstraint(x2x2, c1);

// Derivative.defineConstraint(x1x1x1x1, c2);

// Derivative.defineConstraint(x2x2x2x2, c3);

// ================== df/dx1 =================

FloatVar fx1 = Derivative.getDerivative(store, f, vars, x1);

// ================== df/dx2 =================

FloatVar fx2 = Derivative.getDerivative(store, f, vars, x2);

In the above example, JaCoP will generate two partial derivatives  and

and  . Set vars

is used to keep all variables of function defined by variable f. The function defined by

variable f is usually defined by several constraints using temporary variables, such as x1x1,

x2x2, x1x1x1x1 and x2x2x2x2. In such situations JaCoP tries, using heuristics, to find out

what constraint defines a given temporary variable. Sometimes it is not possible and the

programmer needs to define it, as indicated by the commented code and methods

Derivative.defineConstraint. For example, constraint c0 defines variable x1x1. Method

getDerivative of class Derivative generates a variable that defines the result of the

derivative.

. Set vars

is used to keep all variables of function defined by variable f. The function defined by

variable f is usually defined by several constraints using temporary variables, such as x1x1,

x2x2, x1x1x1x1 and x2x2x2x2. In such situations JaCoP tries, using heuristics, to find out

what constraint defines a given temporary variable. Sometimes it is not possible and the

programmer needs to define it, as indicated by the commented code and methods

Derivative.defineConstraint. For example, constraint c0 defines variable x1x1. Method

getDerivative of class Derivative generates a variable that defines the result of the

derivative.

Derivatives, can be used for setting additional constraints on solutions. For example, when looking for minimum or maximum of a function or in the interval Newton method.

Interval Newton methods combine classical Newton method with interval analysis [13] and are used to provide better bounds on variables.

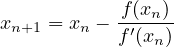

Traditionally, univariate Newton method computes a solution for equation f(x) = 0 using the following approximation.

|

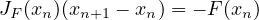

This can be extended by using vector x and a Jacobian matrix instead of f′(x). Furthermore one can extend it to interval arithmetic. This is implemented in JaCoP using “constraint” EquationSystem. This constraint takes as parameters a vector of functions and variables of these functions. The constraints computes then all partial derivatives and form a set of equations that are later solved, if possible, by interval Gauss-Seidel method.

This can be formalized as system of linear equations that, when solved, provides bounds on values (xn+1 - xn) of variables of equations.

|

Below, constraint eqs specifies a set of two functions f0 and f1 of two variables x1 and x2. Constraint eqs will try to prune domains of variables x1 and x2.

EquationSystem eqs = new EquationSystem(store,

new FloatVar[] {f0, f1}, new FloatVar[] {x1, x2});

store.impose(eqs);